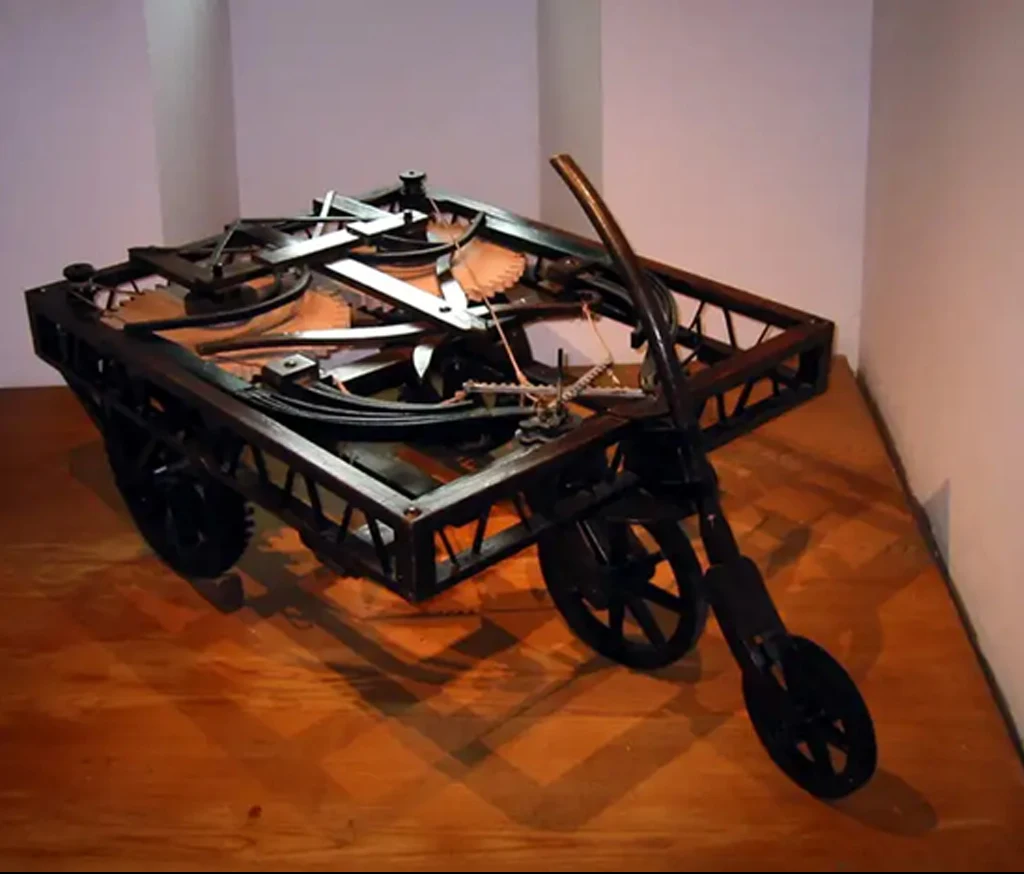

With the advancement of technologies such as artificial intelligence, big data, and cloud computing, the global AI automotive market is undergoing profound changes in both its trends and its competitive landscape. Continuous progress in autonomous driving technology has become one of the main drivers of market growth. Driving automation is graded from Level 0 to Level 5, and the commercialization of Level 2 and Level 3 systems suggests that Level 4 and Level 5 vehicles will gradually enter the market. In addition, intelligent connected technologies link cars to the internet, enabling interaction among vehicles, infrastructure, and pedestrians; this improves driving safety and comfort and gives users a more convenient, intelligent travel experience.

The idea of a self-moving vehicle is much older than the car. In the late 15th century, Leonardo da Vinci designed a small, spring-driven three-wheeled cart that could propel itself. A model reconstructed from his design drawings is now on display at the Château du Clos Lucé in Amboise, France.

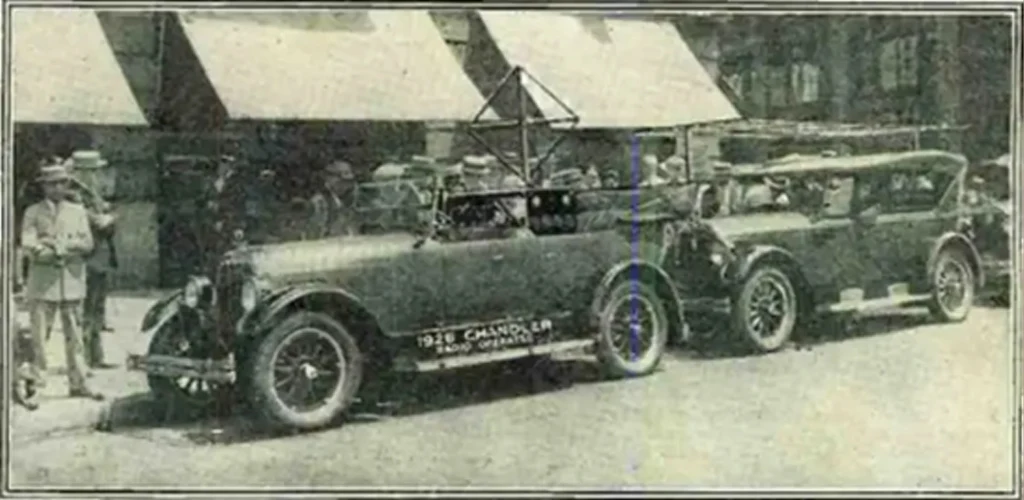

Of course, the “autonomous vehicles” we discuss today only began to appear after the first automobile, Karl Benz’s Patent-Motorwagen, was built in 1886. In July 1925, the Houdina Radio Control Company demonstrated a radio-controlled 1926-model Chandler sedan on the streets of New York. As shown in the image, the car had an antenna mounted on its roof and was operated remotely by a person in a second car following behind.

Sadly, the testing was abruptly halted due to frequent accidents. Clearly, this “autonomous driving” was more akin to a remote-controlled toy, reflecting the early understanding of automation: “there’s no one in the driver’s seat.”

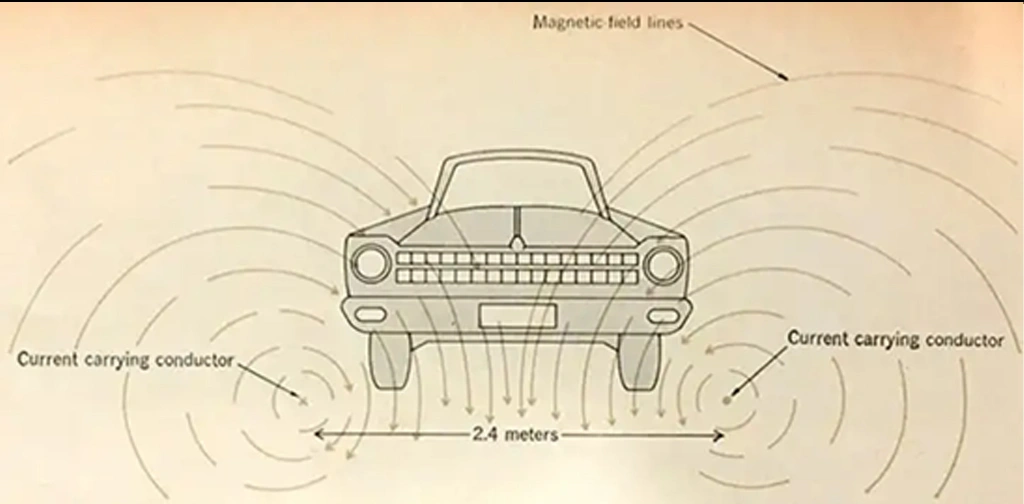

At the 1939 World’s Fair in New York, General Motors unveiled what is often described as the first true concept of an autonomous vehicle. Sensing coils mounted at the front of the car detected currents in wires pre-installed along the road, and the resulting electromagnetic fields were used to steer and control the vehicle. The design was remarkably advanced for its time. However, it required high-power induction cables to be laid along the roads, which was prohibitively expensive, and every route had to be pre-designed by humans. So, although several prototype vehicles based on the same principle appeared between the 1940s and the 1960s, none was ever put to practical use.

In 1956, the concept of “Artificial Intelligence” was first proposed at the Dartmouth Conference, marking the first step in humanity’s exploration of machine intelligence.

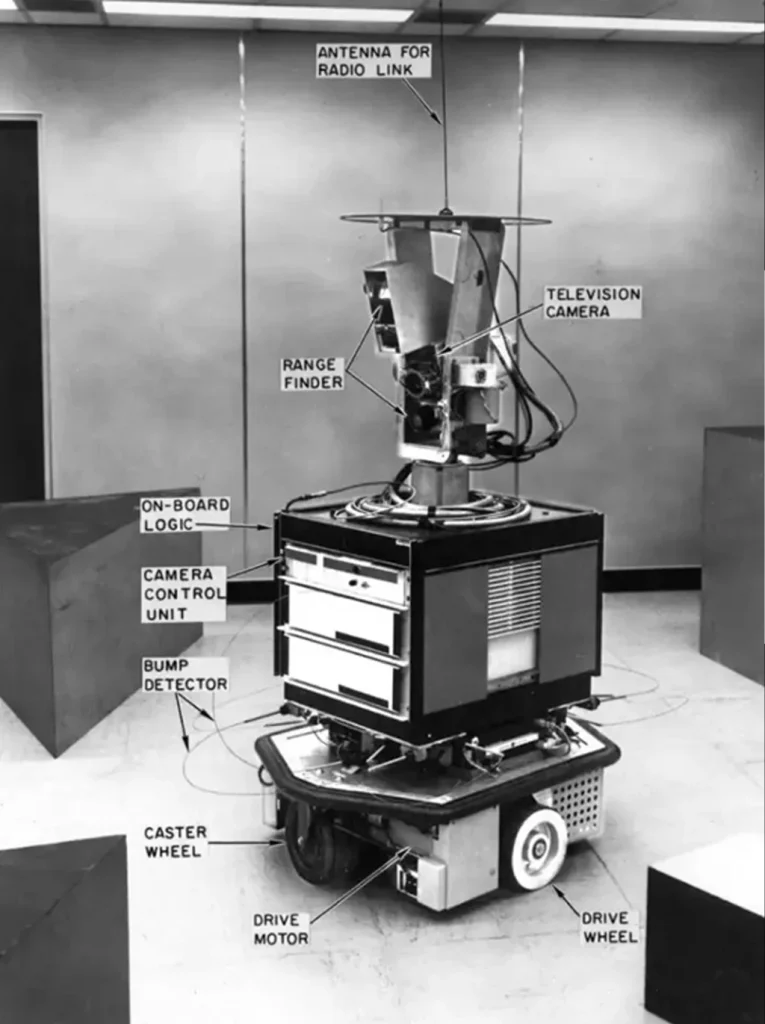

In 1961, James Adams at Stanford University built the “Stanford Cart,” shown in the image above. It was equipped with a camera and, under program control, could detect and follow lines marked on the ground. This is often cited as the first use of a camera in an autonomous vehicle and as the starting point for the visual recognition systems in today’s self-driving cars. The vehicle was designed with operation on the Moon in mind, but it was never put into practical use because of funding issues.

In 1969, Nils Nilsson and colleagues at the Artificial Intelligence Center of the Stanford Research Institute (now SRI International) developed a mobile robot called “Shakey.” It is widely regarded as the world’s first robot capable of autonomous movement, with limited abilities to observe itself and model its environment. The computer controlling Shakey was enormous, occupying an entire room. Shakey was built to demonstrate that machines could mimic biological movement, perception, and obstacle avoidance.

From the 1980s onward, research on autonomous vehicles accelerated, and vision-guided vehicles gradually reached higher operating speeds. This prompted academia and society at large to consider a serious question: once these cars were on public roads, would they be safe enough in complex traffic?

In the 1980s, the U.S. Department of Defense began supporting research on autonomous vehicles through the Defense Advanced Research Projects Agency (DARPA).

In 1995, Carnegie Mellon University’s NavLab 5 traveled 2,850 miles from Pittsburgh to San Diego, California, steering autonomously for over 98% of the distance; throttle and braking remained under human control.

At this stage, artificial intelligence began to replace traditional methods such as remote control and electromagnetic guidance as the mainstream technology for autonomous driving. However, “autonomous driving” at this point still meant a fixed cycle of real-time data collection → rule-based analysis → logical decision-making. Such systems struggled to make their own decisions in real time when road conditions kept changing, and limited data and computing power kept them from reaching higher speeds.

In the early 21st century, as artificial intelligence entered the “fast lane,” neural networks, and deep learning in particular, showed clear advantages thanks to growing computing power and data availability, and autonomous vehicles matured accordingly.

DARPA held three Grand Challenges, in 2004, 2005, and 2007, offering large cash prizes to encourage the development of autonomous and unmanned vehicles. The first competition required vehicles to cover about 150 miles of desert roads, and not a single car reached the finish line. In the third event, the 2007 Urban Challenge, held on a 60-mile urban course, six vehicles completed the challenge.

Unlike the first two competitions, which were held on desert and mountain roads, the third required vehicles to obey traffic regulations and to detect and avoid other vehicles. Vehicles therefore had to make “intelligent” decisions in real time based on the behavior of other road users. This marked the point at which AI-based autonomous vehicles began to show real commercial potential.

These research results were quickly absorbed and applied by commercial companies. In 2016, Google spun off its self-driving car project as Waymo, which became one of the first companies to receive permits from the California Department of Motor Vehicles to operate Level 4 autonomous vehicles (i.e., without a safety driver) on public roads. A name more familiar to many, of course, is Tesla. In April 2024, Elon Musk announced that vehicles equipped with Tesla’s Full Self-Driving (FSD) system, which still requires an attentive driver, had driven a cumulative total of more than one billion miles.

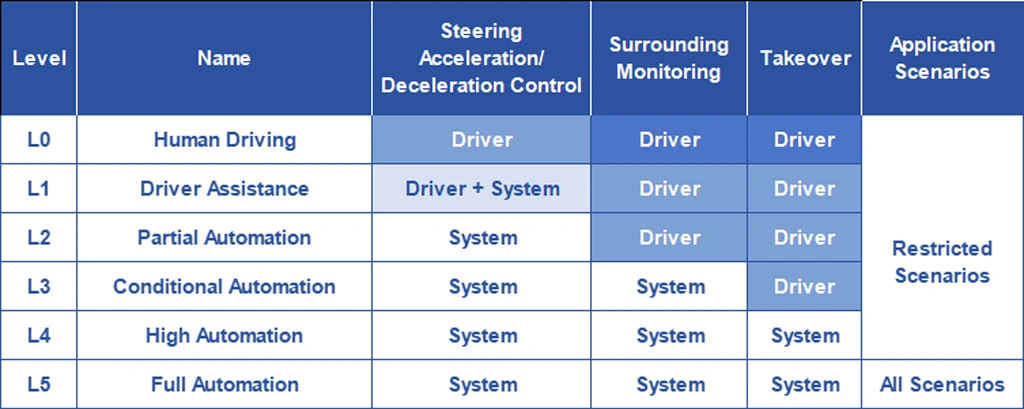

At the same time, standards and regulations for autonomous driving have gradually matured. In 2016, SAE International (the Society of Automotive Engineers) updated its J3016 definitions of the levels of driving automation, which are now widely referenced in industry regulations around the world.
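The level boundaries are easier to see when written down. The Python sketch below encodes the six levels and the two practical questions that separate them: must the human supervise continuously, and must a human be available as a fallback? It is a simplified reading of SAE J3016 rather than the standard’s text, and the names (`SAELevel`, `driver_must_supervise`, `human_fallback_required`, `unlimited_domain`) are hypothetical.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six levels of driving automation (simplified reading of SAE J3016)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: the human is driving, even while driver-support features are engaged."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_fallback_required(level: SAELevel) -> bool:
    """Levels 0-3: a human must be ready to drive; at Level 3, when the system requests it."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


def unlimited_domain(level: SAELevel) -> bool:
    """Only Level 5 is meant to drive everywhere; lower levels work within a limited domain."""
    return level == SAELevel.FULL_AUTOMATION


# Print a small summary table of the simplified rules.
for level in SAELevel:
    print(level.value, level.name, driver_must_supervise(level), human_fallback_required(level))
```

Under this reading, Level 4 is the first level at which no human fallback is needed inside the system’s operating domain, which is what makes operation without a safety driver possible.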

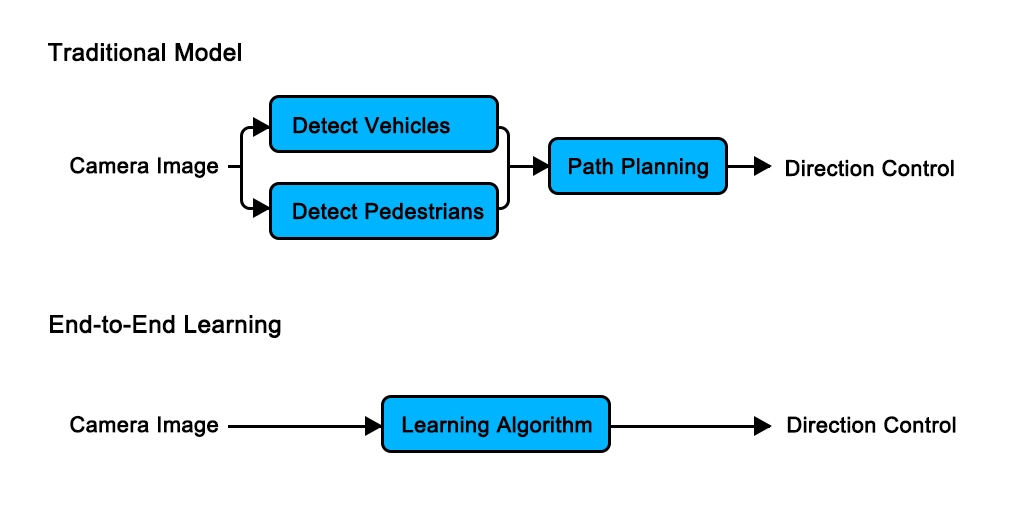

Traditional autonomous driving solutions follow a so-called “modular” approach, which breaks the overall driving task into smaller parts. Each part, or sub-module, has clearly defined responsibilities; typical modules include perception, mapping/localization, prediction, decision-making, planning, and control. The complete intelligent driving task is then accomplished through system integration.

The advantage of this method is that each sub-module can be developed and tested independently. Once the development and testing of the sub-modules are finished, they can then be integrated into the overall system for system-level testing and validation.

Although this approach uses neural networks in some modules, its decision-making and planning still rely heavily on manually coded, pre-defined rules that guide the vehicle’s route based on high-definition maps and traffic regulations.
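A modular stack can be sketched as a chain of small functions, each with a narrow job that can be tested on its own before integration. The Python toy below assumes a one-dimensional road (speed control only, no steering); the HD-map table, thresholds, and function names are hypothetical, and `perceive` is a simple filter standing in for the neural-network detectors a real system would use. Note that `plan` is exactly the kind of hand-written rule described above.

```python
from dataclasses import dataclass

# Hypothetical HD-map data: speed limit (m/s) for each road segment.
HD_MAP_SPEED_LIMITS = {"urban": 13.9, "school_zone": 8.3}


@dataclass
class Obstacle:
    gap_m: float      # distance to the obstacle ahead in our lane, meters
    speed_mps: float  # obstacle's speed along the lane, m/s


def perceive(raw_returns):
    """Perception: turn raw (gap, speed) returns into obstacles, dropping invalid ones.
    A real stack would run neural-network detectors here."""
    return [Obstacle(gap, speed) for gap, speed in raw_returns if gap > 0.0]


def localize(segment_id):
    """Mapping/localization: look up the current segment's speed limit in the HD map."""
    return HD_MAP_SPEED_LIMITS[segment_id]


def predict(obstacles, ego_speed_mps, horizon_s=2.0):
    """Prediction: constant-velocity estimate of each gap after `horizon_s` seconds."""
    return [o.gap_m + (o.speed_mps - ego_speed_mps) * horizon_s for o in obstacles]


def plan(predicted_gaps_m, speed_limit_mps, min_gap_m=10.0):
    """Decision/planning: hand-coded rules. Stop if any predicted gap is too small,
    otherwise drive at the mapped speed limit."""
    if any(gap < min_gap_m for gap in predicted_gaps_m):
        return 0.0
    return speed_limit_mps


def control(target_speed_mps, ego_speed_mps, gain=0.5, max_accel_mps2=3.0):
    """Control: proportional speed controller, clipped to a comfortable acceleration."""
    accel = gain * (target_speed_mps - ego_speed_mps)
    return max(-max_accel_mps2, min(max_accel_mps2, accel))


def drive_step(raw_returns, segment_id, ego_speed_mps):
    """System integration: chain the independently developed modules."""
    obstacles = perceive(raw_returns)
    speed_limit = localize(segment_id)
    gaps = predict(obstacles, ego_speed_mps)
    target = plan(gaps, speed_limit)
    return control(target, ego_speed_mps)


# Each module can be unit-tested on its own before integration:
assert plan([5.0], speed_limit_mps=13.9) == 0.0
# A stopped car 8 m ahead while we drive at 10 m/s: brake at the clipped limit.
print(drive_step([(8.0, 0.0)], "urban", ego_speed_mps=10.0))  # -3.0
```

The strength and the weakness are the same: every behavior is traceable to a specific rule, but any situation the rules did not anticipate has to be handled by writing more rules.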

In contrast, Tesla’s “end-to-end” model views the entire intelligent driving system as a single large module, eliminating the division of modules and tasks. When the end-to-end system receives input data from sensors, it directly outputs driving decisions, including driving actions and motion paths.

This method is entirely data-driven: the whole system is a single large model trained by gradient descent. Gradients are back-propagated from the output all the way to the input, so every parameter in the model is optimized jointly during training. Because the system is developed and tested as one large module, the development and testing process is significantly simplified.
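The principle that one loss, back-propagated through every layer, trains the whole system can be shown at toy scale. The plain-Python sketch below maps two hypothetical “sensor features” (lateral offset and heading error, standing in for camera input) directly to a steering command and fits the model to demonstrations from a made-up expert driver. Everything here (`TinyDrivingNet`, `expert_steering`, the features, network size, and learning rate) is an illustrative assumption; it shows the training idea, not Tesla’s architecture.

```python
import math
import random


def expert_steering(offset, heading):
    """Hypothetical 'human driver' used to label the training data."""
    return -0.8 * offset - 1.5 * heading


def make_dataset(n, rng):
    """Each sample pairs (offset, heading) features with the expert's steering command."""
    data = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        data.append((x, expert_steering(*x)))
    return data


class TinyDrivingNet:
    """Input (2 features) -> tanh hidden layer -> steering. No hand-written driving rules."""

    def __init__(self, hidden, rng):
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2
        return h, y

    def loss(self, data):
        """Mean squared error between model output and expert steering."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, data, lr):
        """One full-batch gradient-descent step; gradients flow from output back to input layer."""
        hidden = len(self.w2)
        g_w1 = [[0.0, 0.0] for _ in range(hidden)]
        g_b1 = [0.0] * hidden
        g_w2 = [0.0] * hidden
        g_b2 = 0.0
        for x, t in data:
            h, y = self.forward(x)
            d_y = 2.0 * (y - t) / len(data)  # dLoss/dy for mean squared error
            g_b2 += d_y
            for j in range(hidden):
                g_w2[j] += d_y * h[j]
                d_pre = d_y * self.w2[j] * (1.0 - h[j] ** 2)  # back-propagate through tanh
                g_b1[j] += d_pre
                for i in range(2):
                    g_w1[j][i] += d_pre * x[i]
        # Update every parameter, from the first layer to the output, in one step.
        for j in range(hidden):
            self.w2[j] -= lr * g_w2[j]
            self.b1[j] -= lr * g_b1[j]
            for i in range(2):
                self.w1[j][i] -= lr * g_w1[j][i]
        self.b2 -= lr * g_b2


rng = random.Random(0)
data = make_dataset(64, rng)
net = TinyDrivingNet(hidden=4, rng=rng)
initial_loss = net.loss(data)
for _ in range(500):
    net.train_step(data, lr=0.1)
final_loss = net.loss(data)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Unlike the modular version, there is no `plan` or `control` function here: the only thing written by hand is the loss, and the driving behavior is whatever the data teaches.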

In other words, the former is still “rule-based,” relying on human-defined rules, while the latter treats the vehicle like a “seasoned driver” that “learns” its driving behavior from vast amounts of training data.

In current practice, the “end-to-end” approach is gradually becoming mainstream in the industry because it adapts flexibly to complex road conditions. However, it requires enormous amounts of training data and computing power, so automakers have sharply increased their investment in artificial intelligence, especially in large models.

During its first-quarter 2024 earnings call, Tesla said it had expanded its AI training cluster to 35,000 NVIDIA H100 GPUs. It also planned to invest an additional $1.5 billion in its supercomputing cluster by the end of 2024, aiming to raise the total computing power of its supercomputing center to 100 EFLOPS.
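For a sense of scale, the two figures can be compared with a back-of-the-envelope estimate. It assumes roughly 0.99 PFLOPS of dense BF16 Tensor Core throughput per H100 (NVIDIA’s published figure for the SXM variant); Tesla did not say which precision its EFLOPS target refers to, so this is only an order-of-magnitude check.

$$
35{,}000 \times 0.99\,\text{PFLOPS} \approx 3.5 \times 10^{19}\,\text{FLOPS} \approx 35\,\text{EFLOPS}
$$

$$
\frac{100\,\text{EFLOPS}}{0.99\,\text{PFLOPS per GPU}} = \frac{10^{20}}{9.9 \times 10^{14}} \approx 1.0 \times 10^{5}\ \text{H100-equivalents}
$$

Under this assumption, the 100 EFLOPS target corresponds to roughly three times the computing power of the 35,000-GPU cluster.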

As mentioned earlier, in the early days of autonomous driving, engineers were already considering guiding vehicles through the road itself, as in GM’s buried-wire concept. Today, this idea has evolved into “vehicle-road cooperation” (or “vehicle-cloud cooperation”), which aims to achieve real-time interaction by connecting vehicles with their environment so that the two work together.

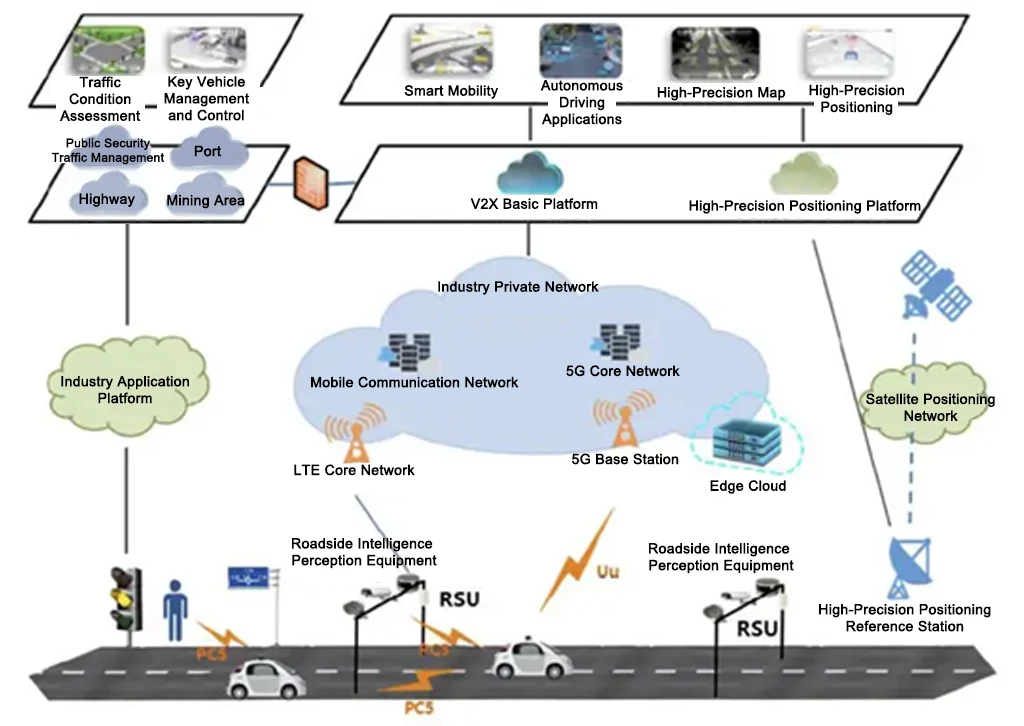

This technology was previously referred to as “Vehicle-to-Everything” (V2X), which connects vehicles with every element of their environment. The V2X concept originates from the Internet of Things (IoT), specifically the Internet of Vehicles, which treats moving vehicles as information-sensing objects. Using next-generation information and communication technology, it connects the vehicle (V) with X (other vehicles, people, roads, and service platforms). The overall architecture is illustrated in the diagram above; note in particular the roadside units (RSUs), which serve as the link between vehicles and the road.
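One benefit of RSUs is extending a car’s view beyond its own sensors. In the Python toy below, assumed for illustration only, the car’s sensors cover a fixed radius, an RSU broadcasts the objects it has detected, and the car merges any report it has not already seen itself. Real V2X systems exchange standardized messages over dedicated radio links; the `DetectedObject` type, the 150 m range, and the 2 m matching radius are hypothetical simplifications, not any standard’s message format.

```python
import math
from dataclasses import dataclass

ONBOARD_RANGE_M = 150.0  # hypothetical onboard sensor range, meters


@dataclass(frozen=True)
class DetectedObject:
    kind: str     # e.g. "vehicle" or "pedestrian"
    x_m: float    # position in a shared road frame, meters
    y_m: float
    source: str   # "onboard" (car's own sensors) or "rsu" (roadside unit)


def onboard_detections(world, ego_xy):
    """Single-vehicle view: the car only sees objects within its own sensor range."""
    ex, ey = ego_xy
    return [DetectedObject(kind, x, y, "onboard") for kind, x, y in world
            if math.hypot(x - ex, y - ey) <= ONBOARD_RANGE_M]


def fuse(onboard, rsu_reports, match_radius_m=2.0):
    """Cooperative view: add RSU-reported objects the car has not already detected."""
    fused = list(onboard)
    for report in rsu_reports:
        already_seen = any(
            o.kind == report.kind
            and math.hypot(o.x_m - report.x_m, o.y_m - report.y_m) <= match_radius_m
            for o in onboard
        )
        if not already_seen:
            fused.append(report)
    return fused


# A car 40 m ahead is visible onboard; a pedestrian 400 m ahead is not.
world = [("vehicle", 40.0, 0.0), ("pedestrian", 400.0, 3.0)]
rsu_reports = [DetectedObject("vehicle", 40.5, 0.2, "rsu"),
               DetectedObject("pedestrian", 400.0, 3.0, "rsu")]
own = onboard_detections(world, ego_xy=(0.0, 0.0))
fused = fuse(own, rsu_reports)
print([(o.kind, o.source) for o in fused])
# [('vehicle', 'onboard'), ('pedestrian', 'rsu')]
```

The pedestrian 400 m ahead appears only in the RSU report, which is the kind of blind spot a car relying on its own sensors cannot cover.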

In contrast, the approach led by Tesla is known as “single-vehicle intelligence.” The advantage of vehicle-road cooperation is that the road itself compensates for the vehicle’s limitations in perception, intelligence, and computing power: “smart roads” let “smart cars” “see further, see wider, and see clearer.” Since 2024, autonomous vehicles have been moving steadily toward commercialization.